I saw a job posting from EyeEm, a photo-sharing app and service, in which they describe their plan to build a search engine that can ‘identify and understand beautiful photographs’. That got me thinking about how I would approach building such a system.

Here is how I would start:

1. Define what you are looking for

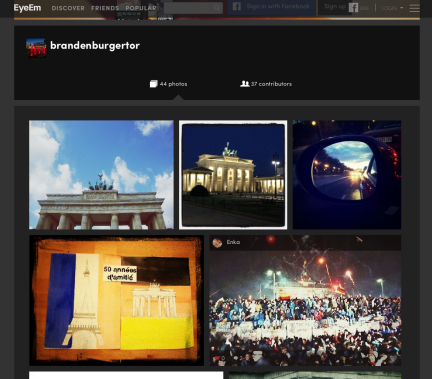

EyeEm already has a search engine based on tags and geo-location. So I assume they want to keep low-quality pictures out of the results and to add missing tags to pictures based on the image’s content. One could also group similar-looking pictures, or rank lower those pictures that “don’t contain their tags”. For instance, there are a lot of similar-looking pictures of the Brandenburger Tor, and even some that don’t show the gate at all.
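To make the filtering and re-ranking idea more concrete, here is a minimal Python sketch. It assumes two hypothetical classifier outputs per photo, a quality score and a per-tag content score, both in [0, 1]; neither is part of EyeEm's system as far as I know, and the names `Photo`, `rerank` and `min_quality` are made up for illustration.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Photo:
    photo_id: str
    tags: set[str]
    quality: float  # hypothetical quality classifier output in [0, 1]
    concept_scores: dict[str, float] = field(default_factory=dict)  # hypothetical per-tag content scores


def rerank(photos: list[Photo], query_tag: str, min_quality: float = 0.3) -> list[Photo]:
    """Drop low-quality photos, then order the rest by how strongly
    the image content supports the queried tag."""
    candidates = [p for p in photos if query_tag in p.tags and p.quality >= min_quality]

    def score(p: Photo) -> float:
        # A photo that carries the tag but does not show it gets a low score.
        return p.concept_scores.get(query_tag, 0.0) * p.quality

    return sorted(candidates, key=score, reverse=True)


photos = [
    Photo("a", {"brandenburger tor"}, 0.9, {"brandenburger tor": 0.95}),
    Photo("b", {"brandenburger tor"}, 0.8, {"brandenburger tor": 0.10}),  # gate not visible
    Photo("c", {"brandenburger tor"}, 0.1, {"brandenburger tor": 0.90}),  # blurry snapshot
]
print([p.photo_id for p in rerank(photos, "brandenburger tor")])  # ['a', 'b']
```

Multiplying the two scores is just one simple choice; a weighted sum or a learned ranking function would work as well.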

But for which concepts should one train the algorithms? Modern image retrieval systems are trained for hundreds of concepts, but I don’t think it is wise to start with that many. Even the most sophisticated, fine-tuned systems have high error rates for most of the concepts, as this year’s results of the Large Scale Visual Recognition Challenge show.

For instance, the team from EUVision / University of Amsterdam, which placed sixth in the classification challenge, selected only 16 categories for their consumer app Impala. For a consumer application I think their tags are a good choice:

- Architecture

- Babies

- Beaches

- Cars

- Cats (sorry, no dogs)

- Children

- Food

- Friends

- Indoor

- Men

- Mountains

- Outdoor

- Party life

- Sunsets and sunrises

- Text

- Women

But of course EyeEm has the luxury of looking at their log files to find out what their users are actually searching for.
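A first pass over such logs could be as simple as counting normalized queries. This sketch assumes a hypothetical log format with one raw query string per line; `top_queries` is an illustrative helper, not an existing tool.

```python
from collections import Counter


def top_queries(log_lines, n=16):
    """Return the n most frequent search terms, normalized to lower case.

    Assumes a hypothetical log format with one raw query string per line.
    """
    queries = (line.strip().lower() for line in log_lines)
    counts = Counter(q for q in queries if q)  # skip empty queries
    return counts.most_common(n)


log = ["Sunset", "cat", "sunset ", "Berlin", "cat", "sunset", ""]
print(top_queries(log, n=2))  # [('sunset', 3), ('cat', 2)]
```

In practice one would also merge synonyms and translations before counting.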

On a comparable task, classifying pictures into 15 scene categories, a team from MIT under Antonio Torralba showed that nearly 90% accuracy can be achieved even with established algorithms [Xiao10]. So I think it’s a good idea to start with a limited number of standard and EyeEm-specific concepts, which allows for usable recognition accuracy even with less sophisticated approaches.
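When comparing accuracy figures like these, it helps to know how they are computed. Scene-recognition benchmarks commonly report the average of the per-class accuracies rather than the raw fraction of correct predictions; the exact protocol in [Xiao10] may differ, so treat this as a general sketch. The toy labels are made up to show why the two numbers can diverge.

```python
from collections import defaultdict


def per_class_accuracy(y_true, y_pred):
    """Fraction of correctly classified examples, computed separately per true class."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for truth, pred in zip(y_true, y_pred):
        total[truth] += 1
        if truth == pred:
            correct[truth] += 1
    return {c: correct[c] / total[c] for c in total}


def mean_per_class_accuracy(y_true, y_pred):
    """Average of the per-class accuracies, so every class counts equally."""
    accs = per_class_accuracy(y_true, y_pred)
    return sum(accs.values()) / len(accs)


def overall_accuracy(y_true, y_pred):
    """Plain fraction of correct predictions over all examples."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


# Toy example: a useless classifier that always answers "beach".
y_true = ["beach", "beach", "beach", "beach", "kitchen"]
y_pred = ["beach"] * 5
print(overall_accuracy(y_true, y_pred))         # 0.8
print(mean_per_class_accuracy(y_true, y_pred))  # 0.5
```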

But what about identifying beautiful photographs? I think in image retrieval there is no concept more desirable and more challenging to master. What does beautiful actually mean? What features make a picture beautiful? How do you quantify these features? Is beautiful even a sensible concept for image retrieval? Might it be more useful to try to predict which pictures will be `liked` or `hearted` a lot? These questions have to be answered before one can even start experimenting. I think for now it is wise to start with just filtering out low-quality pictures and trying to predict which factors make a picture popular.

2. Gather datasets

Not only do the systems need to be trained with example photographs whose depicted concepts we know; we also need data to evaluate the system, to be sure that it really works as intended. Yet gathering useful datasets for learning and benchmarking is one of the hardest and most overlooked tasks. To draw meaningful conclusions, the dataset must consist of huge quantities of realistic example pictures with accurate and consistent metadata. In our case, I would aggregate existing datasets that contain labeled images for the categories we want to learn.
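Keeping training and evaluation data strictly apart, with every category represented in both, can be sketched with a stratified split. `stratified_split` is a hypothetical helper written with the standard library only.

```python
import random
from collections import defaultdict


def stratified_split(items, labels, test_fraction=0.2, seed=0):
    """Split (item, label) pairs so each label keeps roughly the same
    share in the training and the evaluation set."""
    by_label = defaultdict(list)
    for item, label in zip(items, labels):
        by_label[label].append(item)

    rng = random.Random(seed)  # fixed seed keeps the split reproducible
    train, test = [], []
    for label, group in by_label.items():
        rng.shuffle(group)
        # At least one test example per label; a label with a single image
        # therefore ends up only in the test set.
        n_test = max(1, round(len(group) * test_fraction))
        test.extend((item, label) for item in group[:n_test])
        train.extend((item, label) for item in group[n_test:])
    return train, test


images = [f"img_{i}.jpg" for i in range(15)]
labels = ["Beaches"] * 10 + ["Food"] * 5
train, test = stratified_split(images, labels)
print(len(train), len(test))  # 12 3
```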

For starters, the ImageNet, Scene Understanding (SUN) and Faces in the Wild databases seem usable. Additionally, one could manually add pictures from Flickr, Google Image Search and EyeEm’s users.
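Aggregating datasets mostly means mapping each source's label vocabulary onto our own concept list. A minimal sketch, assuming a hand-written lookup table: the source labels below are simplified placeholders, not the exact identifiers used by ImageNet or SUN, and `LABEL_MAP` and `merge_datasets` are hypothetical names.

```python
from collections import defaultdict

# Hypothetical mapping from (source, source label) to one of our target concepts.
# The label strings are simplified placeholders, not exact dataset identifiers.
LABEL_MAP = {
    ("imagenet", "tabby cat"): "Cats",
    ("imagenet", "seashore"): "Beaches",
    ("sun", "beach"): "Beaches",
    ("sun", "mountain"): "Mountains",
    ("flickr", "sunset"): "Sunsets and sunrises",
}


def merge_datasets(records):
    """records: iterable of (source, source_label, image_path).
    Images whose label has no mapping are dropped."""
    merged = defaultdict(list)
    for source, label, path in records:
        concept = LABEL_MAP.get((source, label))
        if concept is not None:
            merged[concept].append(path)
    return dict(merged)


records = [
    ("imagenet", "seashore", "a.jpg"),
    ("sun", "beach", "b.jpg"),
    ("sun", "kitchen", "c.jpg"),  # not one of our concepts
]
print(merge_datasets(records))  # {'Beaches': ['a.jpg', 'b.jpg']}
```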

Apart from a rather limited dataset of paintings and pictures of nature from the Computational Aesthetics Group of the University of Jena, Germany, I don’t know of any good dataset for evaluating how well a system detects beautiful images. Researchers either harvest photo communities that offer peer-rated ‘beautifulness’ scores, such as photo.net [Datta06] or dpchallenge.com [Poga12], or they collect photos and rate them for visual appeal themselves [Poga12, Tang13].

The problem with datasets harvested from photo communities is that they suffer from self-selection bias, because users only upload their best shots. As a result, there are few low-quality shots to train the system on.

Nevertheless, I would advise collecting the data in-house. If labeling an image as low quality takes one second, one person can label 30,000 images in less than 10 hours. Even if each picture has to be labeled by several people to minimize unwanted subjectivity, the effort stays manageable, and this approach ensures that the system learns the notion of beauty favored by EyeEm.
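The labeling arithmetic and the aggregation of several votes per image can be sketched as follows. Majority voting with ties sent back for another opinion is just one simple, assumed aggregation scheme; `labeling_hours` and `majority_label` are hypothetical helpers.

```python
from collections import Counter


def labeling_hours(n_images, seconds_per_label=1.0, labels_per_image=1):
    """Total annotation time in hours."""
    return n_images * seconds_per_label * labels_per_image / 3600


def majority_label(votes):
    """Most common label among several annotators, or None if there are
    no votes or a tie (tied images could go to an additional annotator)."""
    ranked = Counter(votes).most_common()
    if not ranked:
        return None
    if len(ranked) > 1 and ranked[0][1] == ranked[1][1]:
        return None
    return ranked[0][0]


print(round(labeling_hours(30_000), 2))            # 8.33
print(labeling_hours(30_000, labels_per_image=3))  # 25.0
print(majority_label(["low", "ok", "low"]))        # low
print(majority_label(["low", "ok"]))               # None
```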

3. Algorithms to try

I would start with established techniques like the Bag of Visual Words (BoW) approach. As the MIT paper mentioned above describes, over 80% accuracy can already be achieved with this method on a comparable task of classifying 15 indoor and outdoor scene categories [Xiao10]. While this approach originally relied on the patented SIFT feature detector and descriptor, one can choose from a whole list of newer, free alternatives that deliver comparable performance while being much faster and having a lower memory footprint [Miksik2012]. In the MIT paper, the authors also combined BoW with other established methods to push the accuracy to nearly 90%.
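A BoW pipeline boils down to three steps: extract local descriptors, cluster them into a codebook of "visual words", and describe each image by a normalized histogram of its nearest words. Below is a minimal NumPy sketch of the last two steps with a plain k-means loop; random vectors stand in for real SIFT-like descriptors, which would come from a feature extractor (not shown). The resulting histograms would then be fed to a classifier.

```python
import numpy as np


def kmeans(X, k, iters=20, seed=0):
    """Plain Lloyd's k-means; returns a (k, d) array of cluster centers (the codebook)."""
    rng = np.random.default_rng(seed)
    centers = X[rng.choice(len(X), size=k, replace=False)].astype(float)
    for _ in range(iters):
        # Squared Euclidean distance from every descriptor to every center: shape (n, k).
        dists = ((X[:, None, :] - centers[None, :, :]) ** 2).sum(axis=2)
        assignment = dists.argmin(axis=1)
        for j in range(k):
            members = X[assignment == j]
            if len(members):  # keep the old center if a cluster runs empty
                centers[j] = members.mean(axis=0)
    return centers


def bow_histogram(descriptors, codebook):
    """Quantize each descriptor to its nearest visual word and return the
    normalized word-frequency histogram for one image."""
    dists = ((descriptors[:, None, :] - codebook[None, :, :]) ** 2).sum(axis=2)
    words = dists.argmin(axis=1)
    hist = np.bincount(words, minlength=len(codebook)).astype(float)
    return hist / max(hist.sum(), 1.0)


rng = np.random.default_rng(1)
training_descriptors = rng.normal(size=(500, 32))  # stand-in for descriptors pooled from many images
codebook = kmeans(training_descriptors, k=8)
image_descriptors = rng.normal(size=(120, 32))     # stand-in for one image's descriptors
h = bow_histogram(image_descriptors, codebook)
print(h.shape, round(h.sum(), 6))  # (8,) 1.0
```

Real codebooks are much larger (hundreds to thousands of words), and binary descriptors would call for a Hamming-distance variant of the clustering.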

The next step would then be to use Alex Krizhevsky’s implementation of the deep convolutional neural network with which he won last year’s Large Scale Visual Recognition Challenge. The code is freely available online. While much more powerful, this system is also much harder to train: it has many parameters to tune, and there are no good established heuristics for setting them.
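To give an idea of what such a network computes, here is a toy NumPy forward pass through one convolutional stage: convolution with a bank of kernels, a ReLU nonlinearity and 2x2 max pooling. This is not Krizhevsky's code; in a real network many such stages are stacked and the kernels are learned by backpropagation, whereas here a single hand-picked gradient kernel is used.

```python
import numpy as np


def conv2d(image, kernels):
    """Valid 2D cross-correlation of a single-channel image with a kernel bank.
    image: (H, W), kernels: (K, kh, kw) -> output (K, H - kh + 1, W - kw + 1)."""
    K, kh, kw = kernels.shape
    H, W = image.shape
    out = np.zeros((K, H - kh + 1, W - kw + 1))
    for i in range(H - kh + 1):
        for j in range(W - kw + 1):
            patch = image[i:i + kh, j:j + kw]
            out[:, i, j] = (kernels * patch).sum(axis=(1, 2))
    return out


def relu(x):
    return np.maximum(x, 0.0)


def max_pool2x2(x):
    """Maximum over non-overlapping 2x2 blocks; odd trailing rows/columns are dropped."""
    K, H, W = x.shape
    H2, W2 = H // 2, W // 2
    x = x[:, :H2 * 2, :W2 * 2]
    return x.reshape(K, H2, 2, W2, 2).max(axis=(2, 4))


image = np.arange(36, dtype=float).reshape(6, 6)  # brightness rises by 1 per column
kernels = np.array([[[-1.0, 1.0]]])               # one horizontal-gradient kernel
feature = max_pool2x2(relu(conv2d(image, kernels)))
print(feature.shape)  # (1, 3, 2)
```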

But these two approaches won’t really help in assessing the beauty of pictures or identifying the low-quality ones. If one agrees with Microsoft Research’s view of photo quality, defined by simplicity, (lack of) realism and quality of craftsmanship, one could start with the algorithms they designed to distinguish high-quality professional photos from low-quality snapshots [Ke06].
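Two cheap cues that such a quality classifier could start from are blur and contrast. The sketch below uses the variance of a Laplacian filter response as a blur measure (a common heuristic, not the feature from [Ke06]) and, loosely in the spirit of [Ke06], contrast as the width of the intensity range holding the middle 98% of pixels. The synthetic images are made up for illustration.

```python
import numpy as np


def laplacian_variance(gray):
    """Blur cue: variance of a 4-neighbour Laplacian response.
    Sharp images have strong edges and hence a high variance."""
    g = np.asarray(gray, dtype=float)
    lap = g[:-2, 1:-1] + g[2:, 1:-1] + g[1:-1, :-2] + g[1:-1, 2:] - 4 * g[1:-1, 1:-1]
    return lap.var()


def contrast_width(gray, mass=0.98):
    """Contrast cue: width of the intensity range holding the middle `mass` of all pixels."""
    tail = (1 - mass) / 2 * 100
    lo, hi = np.percentile(gray, [tail, 100 - tail])
    return hi - lo


rng = np.random.default_rng(0)
sharp = rng.integers(0, 256, size=(64, 64)).astype(float)
# Crude blur: average each pixel with its right, lower and lower-right neighbours.
blurry = (sharp[:-1, :-1] + sharp[1:, :-1] + sharp[:-1, 1:] + sharp[1:, 1:]) / 4
flat = np.full((64, 64), 128.0)

print(laplacian_variance(sharp) > laplacian_variance(blurry))  # True
print(contrast_width(flat))  # 0.0
```

Rather than thresholding these values by hand, they would serve as features for a classifier trained on the in-house quality labels.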

Caveats

Specific to the case at hand, I predict that EyeEm’s photo filters will cause problems. They change the colors, and some of them add high- and low-frequency elements. This will decrease the accuracy of the algorithms. To prevent this, the analysis has to be performed on the phone, or the unaltered image has to be uploaded as well.
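To illustrate the concern, the sketch compares a simple color-histogram feature before and after a made-up "warm" filter that shifts red up and blue down. Both `warm_filter` and the histogram feature are hypothetical stand-ins, not EyeEm's filters or any specific recognition feature; the point is only that the feature vector moves although the scene content is unchanged.

```python
import numpy as np


def color_histogram(rgb, bins=8):
    """Concatenated per-channel histograms, each normalized to sum to 1."""
    hists = []
    for c in range(3):
        h, _ = np.histogram(rgb[..., c], bins=bins, range=(0, 256))
        hists.append(h / h.sum())
    return np.concatenate(hists)


def warm_filter(rgb, strength=40):
    """Hypothetical 'warm' filter: boost red, cut blue, clip to the valid range."""
    out = rgb.astype(float)
    out[..., 0] += strength
    out[..., 2] -= strength
    return np.clip(out, 0, 255)


rng = np.random.default_rng(0)
photo = rng.integers(0, 256, size=(32, 32, 3))
# L1 distance between the features of the original and the filtered photo.
shift = np.abs(color_histogram(photo) - color_histogram(warm_filter(photo))).sum()
print(f"feature shift caused by the filter: {shift:.2f}")
```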

If I remember correctly, I once read that EyeEm applies the filters to pictures in full resolution on their servers and sends the result back to the users’ phones afterwards. If this is still the case, both approaches are feasible. But as phones become more and more powerful, a system that works on the phone is preferable, as it is inherently more scalable.

Another challenge would be distinguishing between low-quality pictures and pictures that break the rules of photography on purpose. The picture on the right, for example, has a blue undertone, low contrast and is quite blurry. But while these features make the image special, they would also trigger a low-quality detector. It will be interesting to see whether machine learning algorithms can learn to distinguish between the two cases.

So to recap:

1. Make sure the use case is sound.

2. Collect loads of data to train and evaluate.

3. Start with simple, proven algorithms and increase the complexity step by step.